MTE Documentation

MTE tool

The Music Theory Environment (MTE) is an effort to manipulate higher dimensional concepts of music through a software visualization tool. It is based on the premise that the musical staff notation we currently use is an instantiation of a higher dimensional musical idea. Condensed musical representation is well known among musicians, who can quite easily create music together from a condensed musical recipe. For example, at a jazz jam session the musicians will agree on a key and a progression (12 bar blues in E). From that alone they know which notes are appropriate for the given 12 bar cycle, and any notes played outside that range need justification, usually by building a bridge to the more “outside” notes.

As an analogy, we can think of musical notes in computer science terms as binary data, which a Turing machine can manipulate in any combination to represent higher dimensional ideas. So binary data is to assembly language as notes are to chords. Furthermore, we can infer that binary data is to C++ as notes are to chord progressions.

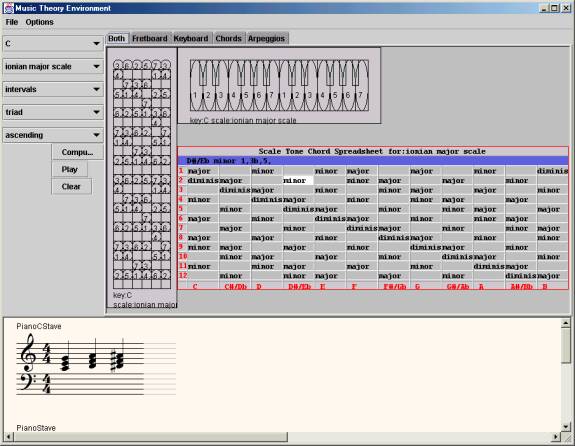

Currently the MTE tool provides a master console with access to various types of higher dimensional note manipulation in the form of chords, scales, and arpeggios. These are represented in fretboard and piano keyboard views.

Navigating the console:

Step 1: Musical Database Generation

The left column creates intervals, scales, and arpeggios according to the given parameters. In its initial state the console generates all scale tone chords in all keys for a basic triad; the “chord spreadsheet” and “arpeggio spreadsheet” are generated in this way. This produces data in the tool view on the right.
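
As a rough illustration of what generating scale tone chords for a basic triad in every key can look like, here is a minimal sketch. It is not the MTE source: the class name `ScaleToneTriads`, the method `triads`, the restriction to the major scale, and the sharps-only note spelling are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch, not the MTE source: builds the seven scale tone triads of a major key.
class ScaleToneTriads {
    // Pitch-class names, spelled with sharps only for simplicity (an assumption).
    static final String[] NAMES = {"C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"};
    // Semitone offsets of the major scale degrees from the tonic.
    static final int[] MAJOR = {0, 2, 4, 5, 7, 9, 11};

    // Returns one triad per scale degree, built by stacking every other scale tone.
    // tonic is a pitch class from 0 (C) to 11 (B).
    static List<String[]> triads(int tonic) {
        List<String[]> result = new ArrayList<>();
        for (int degree = 0; degree < 7; degree++) {
            String[] chord = new String[3];
            for (int i = 0; i < 3; i++) {
                int step = degree + 2 * i; // root, third, fifth counted in scale steps
                int semitones = MAJOR[step % 7] + 12 * (step / 7);
                chord[i] = NAMES[(tonic + semitones) % 12];
            }
            result.add(chord);
        }
        return result;
    }

    public static void main(String[] args) {
        // Print the triads for all twelve keys, one key per line.
        for (int tonic = 0; tonic < 12; tonic++) {
            StringBuilder line = new StringBuilder(NAMES[tonic] + " major:");
            for (String[] chord : triads(tonic)) {
                line.append(' ').append(String.join("-", chord));
            }
            System.out.println(line);
        }
    }
}
```

Extending the inner loop from three to four stacked tones would give seventh chords instead of basic triads, which is one way a “given parameters” option could be modeled.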

Step 2:

A subdivision of the console is the generated database view of the scale tone chords and arpeggio chords. Picking entries from the “arpeggio spreadsheet” or “chord spreadsheet” will generate notes in the musical staff window below.

Step 3:

Customize the notes in the musical staff window and play them.

Transcriber Tool

The transcriber is a Java based audio tool that transcribes PCM audio into musical notation. It falls under the general class of tools known as Computational Auditory Scene Analysis, and its techniques draw on the field of digital audio signal processing. Transcribing audio into musical notation is a hard problem for the following reasons:

1) We are trying to isolate a single note (frequency) from a series of notes (frequencies). This can be done using several “pitch tracking” techniques, each of which has tradeoffs:

a. Statistical autocorrelation of the signal

b. Fast Fourier transform – just gives frequencies, we still have harmonics

c. Cepstrum – 2 applications of the FFT to isolate the fundamental tone from its harmonics

2) We don’t fully understand the characteristics of different instruments well enough to isolate or identify them successfully

a. concepts of timbre
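
To make the first technique in the list concrete, here is a minimal sketch of autocorrelation pitch tracking on a single frame of mono samples. It is not the transcriber's code (the transcriber reuses an FFT based analyzer); the class name `AutocorrelationPitch`, the method `estimateHz`, and the search range parameters are assumptions made for the example.

```java
// Hypothetical sketch of autocorrelation pitch tracking; not the transcriber's actual code.
class AutocorrelationPitch {
    // Estimates the fundamental frequency in Hz of one frame by finding the lag
    // with the strongest normalized autocorrelation inside [minHz, maxHz].
    // Returns -1.0 when no lag has a positive correlation (for example, silence).
    static double estimateHz(double[] samples, double sampleRate, double minHz, double maxHz) {
        int minLag = Math.max(1, (int) Math.floor(sampleRate / maxHz));
        int maxLag = Math.min((int) Math.ceil(sampleRate / minHz), samples.length - 1);
        int bestLag = -1;
        double bestScore = 0.0;
        for (int lag = minLag; lag <= maxLag; lag++) {
            double sum = 0.0;
            for (int i = 0; i + lag < samples.length; i++) {
                sum += samples[i] * samples[i + lag];
            }
            // Divide by the number of terms so that long lags are not penalized.
            double score = sum / (samples.length - lag);
            if (score > bestScore) {
                bestScore = score;
                bestLag = lag;
            }
        }
        return bestLag < 0 ? -1.0 : sampleRate / bestLag;
    }
}
```

One tradeoff shows up directly in this sketch: every whole multiple of the true period scores almost as high as the period itself, so a search range that is too wide can report a pitch one or more octaves too low.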

Internal concepts

What follows is a discussion of the major system components.

Input audio stream

I didn’t want to reinvent the wheel here, so I used the audio input tool from the Sun example source code with the JDK 1.1 distribution:

Pitch Tracking

Again I didn’t want to reinvent the wheel, so I used the spectrum analyzer tool from:

Which implements a Fast Fourier Transform (FFT).

Pitch to Note mapping

This was actually a part which required some construction and research. The best explanation I found was from www.physlink.com.

We are using the “equal tempered” scale, which is constructed by doubling the frequency to reach the frequency of the next octave. We divide the octave into 12 notes, so we use the 12th root of two as our step: multiplying a frequency by the 12th root of two raises it by one note.
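
The 12th root of two step can be checked with a few lines of Java. This is a small sketch rather than code from the transcriber; the class name `EqualTemperament` and the method `frequency` are hypothetical, and the 440 Hz reference for A4 is the one used elsewhere in this document.

```java
// Hypothetical sketch: frequencies of the equal tempered scale, stepping by the 12th root of two.
class EqualTemperament {
    static final double A4_HZ = 440.0;
    static final double SEMITONE = Math.pow(2.0, 1.0 / 12.0); // the 12th root of two

    // Frequency of the note that lies `semitones` steps above A4 (negative values go below).
    static double frequency(int semitones) {
        return A4_HZ * Math.pow(SEMITONE, semitones);
    }

    public static void main(String[] args) {
        // Walk one octave upward from A4; the last line lands on double the starting frequency.
        for (int n = 0; n <= 12; n++) {
            System.out.printf("%2d notes above A4: %.2f Hz%n", n, frequency(n));
        }
    }
}
```

Twelve steps multiply the frequency by exactly two, which is why the step has to be the 12th root of two rather than a fixed number of Hz.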

We needed some constants to map between the frequency domain and the MIDI domain:

MIDI range is 0-127

Middle C is MIDI value 60.

A4 is MIDI value 69 (nine notes above middle C).

Frequency of A4 is 440 Hz.

So here is our general formula for mapping frequencies to MIDI notes:

MIDI note = MIDI of A4 – 12 × log2(440 / freq)

Example: given a frequency of 129 Hz, what is the MIDI value?

MIDI of A4 – (MIDI offset from A4) = MIDI note

MIDI offset from A4 = 12 × log2(440 / 129) ≈ 21

MIDI of A4 – 21 = MIDI note

69 – 21 = MIDI note

48 = MIDI note

Implementation:

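Problem: we only have log base 10 in some math libraries and the natural logarithm in the Java math library.

In what follows, $f$ is the measured frequency in Hz and $n$ is the offset in notes below A4.

If we had log base 2:

$$n = 12 \log_2\left(\frac{440}{f}\right)$$

This follows from the additive rule of exponents: $n$ steps of $2^{1/12}$ multiply to $2^{n/12}$, so $440 / f = 2^{n/12}$.

Since some libraries only have base 10:

$$n = 12 \cdot \frac{\log_{10}(440 / f)}{\log_{10} 2}$$

Compute the constant using a calculator:

$$\frac{12}{\log_{10} 2} \approx 39.86314$$

Since Java only has the natural log, which is base e:

$$n = 12 \cdot \frac{\ln(440 / f)}{\ln 2}$$

Compute the constant using a calculator:

$$\frac{12}{\ln 2} \approx 17.31234$$

So we use 17.31234 as our log base e conversion factor.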
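
The mapping can be sketched in Java using only `Math.log`, the natural logarithm. This is an illustration under stated assumptions, not the transcriber's code: the class name `PitchToMidi` and the method `toMidi` are hypothetical, and the sketch assumes the standard MIDI numbering in which A4 is note 69 (consistent with middle C being 60).

```java
// Hypothetical sketch of the frequency to MIDI mapping using only the natural logarithm.
class PitchToMidi {
    static final double A4_HZ = 440.0;
    // Assumption: standard MIDI numbering, where A4 is note 69 and middle C is note 60.
    static final int A4_MIDI = 69;
    // 12 / ln(2), about 17.31234: converts a natural log of a frequency ratio into notes.
    static final double LN_FACTOR = 12.0 / Math.log(2.0);

    // Returns the nearest MIDI note for a frequency in Hz,
    // or -1 if the frequency is not positive or the note falls outside 0-127.
    static int toMidi(double hz) {
        if (hz <= 0.0) {
            return -1;
        }
        double offsetBelowA4 = LN_FACTOR * Math.log(A4_HZ / hz);
        long midi = A4_MIDI - Math.round(offsetBelowA4);
        return (midi < 0 || midi > 127) ? -1 : (int) midi;
    }
}
```

Under these assumptions `toMidi(440.0)` returns 69 and `toMidi(129.0)` returns 48. Computing the factor as `12.0 / Math.log(2.0)` instead of typing 17.31234 avoids carrying a rounded constant through the code.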

Note aggregation

This part condenses the data into uniformly sized units.

The problem is that data comes out of the FFT every x samples, so a note may be spread out over several reports of the FFT. We should take these reports and align them into time units that match musical notation (quarter note, whole note, etc.).
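
One possible way to do this alignment is sketched below: consecutive FFT reports that carry the same note are merged into a run, and each run length is rounded to a whole number of sixteenth notes. The document does not describe the actual algorithm, so everything here is an assumption: the class `NoteAggregator`, the `Note` type, the choice of the sixteenth note as the smallest unit, and the rule of dropping runs that round to zero.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of note aggregation; the names and the rounding rule are assumptions.
class NoteAggregator {
    // One aggregated note: a MIDI value plus a length counted in sixteenth notes.
    static final class Note {
        final int midi;
        final int sixteenths;

        Note(int midi, int sixteenths) {
            this.midi = midi;
            this.sixteenths = sixteenths;
        }
    }

    // reports: one MIDI value per FFT report, in time order.
    // reportsPerSixteenth: how many FFT reports span one sixteenth note at the current tempo.
    static List<Note> aggregate(int[] reports, double reportsPerSixteenth) {
        List<Note> notes = new ArrayList<>();
        int start = 0;
        for (int i = 1; i <= reports.length; i++) {
            // A run ends at the end of the input or when the reported note changes.
            if (i == reports.length || reports[i] != reports[start]) {
                int runLength = i - start;
                int units = (int) Math.round(runLength / reportsPerSixteenth);
                if (units > 0) {
                    notes.add(new Note(reports[start], units)); // runs that round to zero are dropped
                }
                start = i;
            }
        }
        return notes;
    }
}
```

With two reports per sixteenth note, a run of eight identical reports becomes a note of four sixteenths, that is, a quarter note. Dropping very short runs filters out single report glitches, but it also discards their time, so a fuller version would need to account for rests and for the lost duration.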

Note display (staff)

This is the third reused component, from MTE, which in turn came from JavaMusic:

Parsing musical notation is a difficult problem due to the number of exceptions to the rules that must be handled when displaying the information.